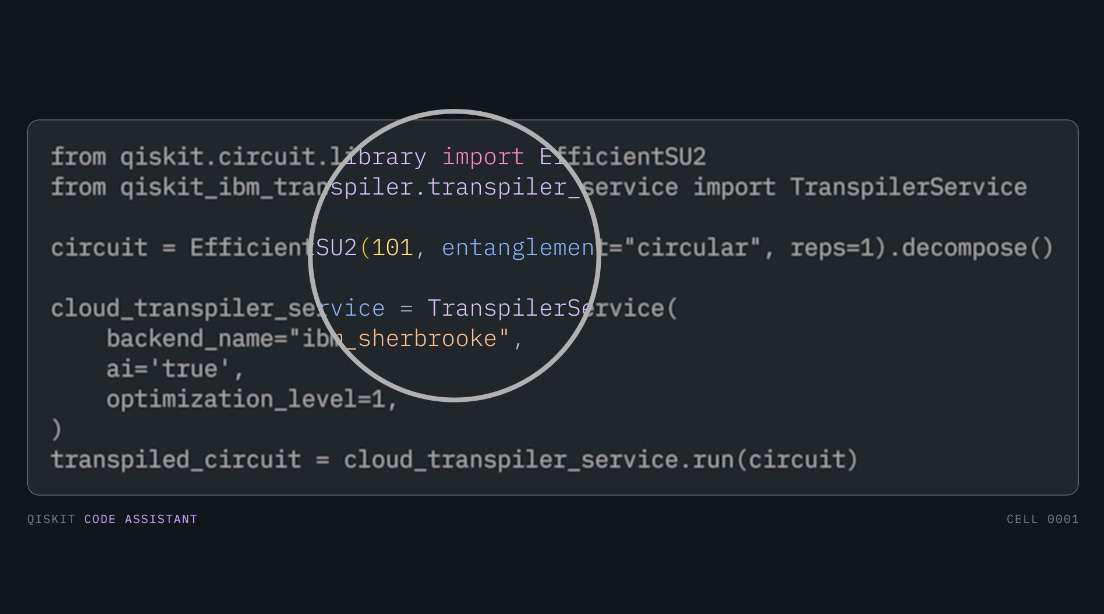

Researchers from Princeton and the University of Chicago have made significant strides in developing a code generation model specifically designed for quantum computing tasks. The IBM Qiskit Code Assistant, built on top of IBM’s 8-billion-parameter Granite Code model, has been trained on a large dataset to generate high-quality quantum code.

To further improve its performance, the team extended its training with additional data from Qiskit tutorials, Python scripts, Jupyter notebooks, and open-source GitHub repositories.
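The article does not say how the notebook data was preprocessed. One plausible step, sketched here as an assumption rather than the team's actual pipeline, is pulling only the code cells out of each Jupyter notebook. The `.ipynb` format is JSON with a `cells` list, and each cell's `source` may be stored as a single string or a list of lines; the function name `notebook_code_cells` is hypothetical.

```python
import json


def notebook_code_cells(notebook_json: str) -> list[str]:
    """Return the non-empty code cells of a Jupyter notebook as strings (illustrative sketch)."""
    nb = json.loads(notebook_json)
    cells = []
    for cell in nb.get("cells", []):
        # Skip markdown and raw cells; keep only executable code
        if cell.get("cell_type") != "code":
            continue
        source = cell.get("source", "")
        # nbformat stores source either as one string or as a list of lines
        if isinstance(source, list):
            source = "".join(source)
        if source.strip():
            cells.append(source)
    return cells


# A tiny hand-written notebook: one markdown cell, one code cell, one empty code cell
example = json.dumps({
    "cells": [
        {"cell_type": "markdown", "source": ["# Bell state\n"]},
        {"cell_type": "code", "source": ["from qiskit import QuantumCircuit\n", "qc = QuantumCircuit(2)\n"]},
        {"cell_type": "code", "source": ""},
    ],
    "metadata": {},
    "nbformat": 4,
    "nbformat_minor": 5,
})
print(len(notebook_code_cells(example)))  # 1
```

Markdown cells are dropped here for simplicity; a real pipeline might keep them, since the surrounding explanations are exactly the kind of context a quantum code model needs.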

The model was evaluated using the Qiskit HumanEval dataset, a novel benchmarking tool developed by IBM experts and members of the Qiskit community. The results show that the Qiskit Code Assistant significantly outperformed other state-of-the-art code generation models in quantum coding tasks.

This advance could make quantum programming more accessible and efficient for developers and researchers alike.

The Qiskit Code Assistant’s strong performance likely owes much to two data choices: the addition of synthetic data and careful filtering of deprecated code.

LLMs + Quantum Computing

Large language models (LLMs) are a type of “generative AI”: systems that learn statistical patterns from data and use them to create new content. LLMs are trained on vast text and code datasets to predict the next token (a word or word fragment) in a sequence, based on the preceding input and the patterns identified in their training data. At each step, the model assigns a probability to every candidate token and then either picks the most likely one (greedy decoding) or samples from that distribution.
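The next-token step can be sketched in a few lines. This is a toy illustration, not how any particular model is implemented: the four-token vocabulary and its scores (logits) are made up, whereas a real LLM scores tens of thousands of tokens using a neural network. Softmax turns the scores into probabilities, and the model then decodes greedily or by sampling.

```python
import math
import random


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw scores into a probability distribution over tokens."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def greedy_next(probs: dict[str, float]) -> str:
    """Greedy decoding: always pick the single most likely token."""
    return max(probs, key=probs.get)


def sample_next(probs: dict[str, float], rng: random.Random) -> str:
    """Sampling: draw a token at random, weighted by its probability."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical scores a model might assign after the prompt "qc.h(0); qc."
logits = {"cx": 2.0, "measure": 1.0, "x": 0.5, "barrier": -1.0}
probs = softmax(logits)
print(greedy_next(probs))  # cx
print(sample_next(probs, random.Random(0)))
```

Greedy decoding is deterministic, while sampling trades some accuracy for variety; code assistants often favor greedy or low-temperature decoding because a single wrong token can break a program.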

Trained this way, LLMs have become a powerful tool for generating classical software code. Generating quantum code, however, is more challenging.

Training an LLM on code examples alone is insufficient for producing high-quality quantum code, as the model also requires foundational knowledge of quantum computing to interpret user requests correctly. For instance, if a user asks the model to generate code for the Deutsch-Jozsa algorithm, the phrase “Deutsch-Jozsa algorithm” may not appear in the code itself, so the model needs contextual understanding to link the request with the correct code output.
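To make the point concrete, here is a minimal sketch of what Deutsch-Jozsa code actually computes. It is written in plain Python as a small statevector simulation rather than in Qiskit, and it uses the phase-oracle formulation (an assumption; textbook circuits often add an ancilla qubit instead). The function names are our own: the underlying operations (Hadamards, a sign flip, a measurement probability) never mention Deutsch-Jozsa, which is why a model has to learn the link from context.

```python
import math


def hadamard_all(state: list[float]) -> list[float]:
    """Apply a Hadamard gate to every qubit of an n-qubit statevector (length 2**n)."""
    size = len(state)
    norm = 1 / math.sqrt(size)
    # H^{⊗n}|x> = (1/sqrt(2^n)) * sum_y (-1)^{x·y} |y>
    return [
        norm * sum((-1) ** bin(x & y).count("1") * amp for x, amp in enumerate(state))
        for y in range(size)
    ]


def deutsch_jozsa(f, n: int) -> str:
    """Classify f: {0, ..., 2**n - 1} -> {0, 1} as 'constant' or 'balanced' with one oracle query."""
    size = 2 ** n
    state = [0.0] * size
    state[0] = 1.0                                               # start in |00...0>
    state = hadamard_all(state)                                  # uniform superposition
    state = [(-1) ** f(x) * amp for x, amp in enumerate(state)]  # phase oracle
    state = hadamard_all(state)                                  # interfere the branches
    p_all_zeros = abs(state[0]) ** 2                             # P(measure |00...0>)
    # Constant f gives probability 1, balanced f gives probability 0
    return "constant" if p_all_zeros > 0.5 else "balanced"


print(deutsch_jozsa(lambda x: 0, 3))      # constant
print(deutsch_jozsa(lambda x: x & 1, 3))  # balanced
```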

Another difficulty in quantum code generation is the limited availability of training data due to the smaller size of the quantum computing field compared to classical computing. Furthermore, the field of quantum computing evolves rapidly, with libraries frequently updated with new techniques. As a result, quantum code generation models must be updated regularly to remain effective, and older training data may quickly become outdated.
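One way to keep outdated examples out of a training set is to drop samples that use removed APIs. The sketch below is an illustration, not the team's actual filter: the pattern list is a small hand-picked assumption covering a few APIs removed in Qiskit 1.0 (`qiskit.execute`, the `qiskit.opflow` module, and the `BasicAer` provider), and the function names are hypothetical.

```python
import re

# Illustrative patterns for APIs removed in Qiskit 1.0; a real pipeline
# would need a much longer list tied to specific library versions.
DEPRECATED_PATTERNS = [
    re.compile(r"\bqiskit\.execute\b"),
    re.compile(r"from\s+qiskit\s+import\s+[^\n]*\bexecute\b"),
    re.compile(r"\bqiskit\.opflow\b"),
    re.compile(r"\bBasicAer\b"),
]


def uses_deprecated_api(source: str) -> bool:
    """Return True if the code sample matches any known-deprecated pattern."""
    return any(p.search(source) for p in DEPRECATED_PATTERNS)


def filter_training_samples(samples: list[str]) -> list[str]:
    """Keep only samples that do not reference deprecated Qiskit APIs."""
    return [s for s in samples if not uses_deprecated_api(s)]


samples = [
    "from qiskit import QuantumCircuit, execute, BasicAer\nqc = QuantumCircuit(1)\n",
    "from qiskit import QuantumCircuit\nqc = QuantumCircuit(2)\nqc.h(0)\nqc.cx(0, 1)\n",
    "from qiskit.opflow import PauliSumOp\n",
]
kept = filter_training_samples(samples)
print(len(kept))  # 1
```

Regex matching is cheap but shallow; it can miss aliased imports, so a stricter filter might instead try running each sample against the current library version.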

What’s particularly interesting is the creation of the Qiskit HumanEval dataset, a benchmarking tool designed explicitly for evaluating the performance of LLMs in generating quantum code. This fills a significant gap in the research landscape, as there was previously no standardized way to evaluate the quality of quantum code generated by LLMs.
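HumanEval-style benchmarks score a model by running its completion against hidden unit tests. The sketch below mimics the original HumanEval task layout (a prompt with a function signature and docstring, a test defining `check(candidate)`, and an entry-point name). Assuming Qiskit HumanEval follows a similar structure, the task here is still made up and deliberately avoids Qiskit so it runs anywhere; `TASK` and `passes` are hypothetical names.

```python
# A hypothetical task in the HumanEval style
TASK = {
    "prompt": (
        "def bell_state_amplitudes():\n"
        '    """Return the 4 statevector amplitudes of (|00> + |11>)/sqrt(2)."""\n'
    ),
    "test": (
        "import math\n"
        "def check(candidate):\n"
        "    amps = candidate()\n"
        "    expected = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]\n"
        "    assert len(amps) == 4\n"
        "    assert all(math.isclose(a, e, abs_tol=1e-9) for a, e in zip(amps, expected))\n"
    ),
    "entry_point": "bell_state_amplitudes",
}


def passes(task: dict, completion: str) -> bool:
    """Run prompt + model completion, then the task's unit test.

    WARNING: exec() runs arbitrary code; real harnesses sandbox this step.
    """
    namespace: dict = {}
    try:
        exec(task["prompt"] + completion, namespace)  # define the candidate function
        exec(task["test"], namespace)                 # define check()
        namespace["check"](namespace[task["entry_point"]])
        return True
    except Exception:  # syntax errors, runtime errors, and failed asserts all count as a fail
        return False


good = "    import math\n    return [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]\n"
bad = "    return [0.5, 0.5, 0.5, 0.5]\n"
print(passes(TASK, good))  # True
print(passes(TASK, bad))   # False
```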

The results of the comparison between the Qiskit Code Assistant and other state-of-the-art open-source code LLMs are striking. The granite-8b-qiskit model significantly outperformed its competitors in generating quantum code, passing 46.53% of the benchmarking tests in the Qiskit HumanEval dataset.
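HumanEval-style results are usually reported as pass@k: the estimated probability that at least one of k generated samples passes a task's tests, averaged over tasks. The sketch below uses the unbiased estimator introduced with the original HumanEval benchmark. Treating the 46.53% figure as pass@1 is an assumption here; with one sample per task, pass@1 is simply the fraction of tasks solved.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one task: n samples generated, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over tasks; results holds one (n, c) pair per task."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# One sample per task, 2 of 4 tasks solved
print(benchmark_score([(1, 1), (1, 0), (1, 1), (1, 0)]))  # 0.5
```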

The decision to open source the granite-8b-qiskit LLM and the Qiskit HumanEval dataset is a welcome move. This will enable researchers and developers worldwide to build upon these resources, driving further innovation and improvement in the field.

As we move forward, it’s essential to continue updating and refining these models to ensure they remain relevant and valuable for users. The plan to solicit feedback from the community is crucial in identifying areas for improvement and ensuring the dataset meets the diverse needs of quantum computing practitioners.

Overall, this development has significant implications for the advancement of quantum computing research and its applications. Now, even quantum computing has its ChatGPT moment.
